Software Performance Guide: AWS Lambdas


In this article I'm going to provide a deep-dive into creating performant AWS serverless architecture and testing AWS Lambdas.

Introduction

A common misconception about serverless computing is that it means not using a server at all. In reality, 'serverless' means that you don't manage the server; instead, the cloud provider handles it for you. This distinction is crucial, as that misconception leads to a whole heap of problems, often with system performance.

In my opinion AWS can sometimes be unclear about the details of an offering. I'm not sure whether the same is true of other providers such as Azure Functions, but I presume some of the problems are shared. This article will cover common pitfalls and how to configure Lambdas.

Lambdas

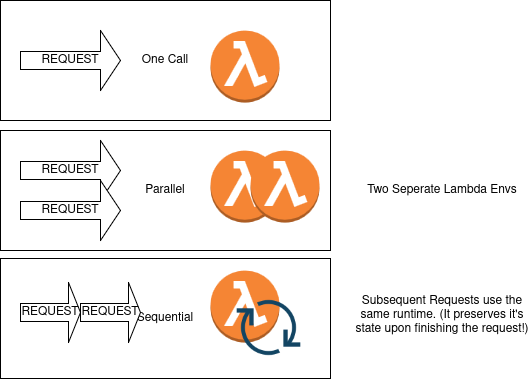

Lambdas are individual runtimes that you borrow for a period of time, up to 15 minutes. If two requests come in at the same time, you get two runtimes. Three at the same time? You get three, up to a configurable limit (1000 by default). If requests happen one after another, you use the first one twice. They don't end immediately after finishing: the underlying runtime stays provisioned for a bit, and after that time it's gone.

This is actually the best part of Lambdas and where the 'autoscaling' comes from: the more you call, the more Lambdas you get!
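To make the "two at once means two runtimes, one after another means reuse" idea concrete, here is a minimal simulation. It is only a sketch: the function name is made up for illustration, each runtime is assumed to handle one request at a time, and idle runtimes are assumed never to expire.

```python
import heapq


def environments_needed(requests):
    """Count the runtimes a set of requests would spin up.

    `requests` is a list of (start_time, duration) pairs in seconds.
    A runtime handles one request at a time and is reused once it is free.
    Idle expiry is ignored to keep the sketch small.
    """
    busy_until = []  # min-heap of the times at which each runtime becomes free
    for start, duration in sorted(requests):
        if busy_until and busy_until[0] <= start:
            # A warm runtime is free: reuse it instead of creating a new one.
            heapq.heapreplace(busy_until, start + duration)
        else:
            # Every existing runtime is busy: a new one has to be created.
            heapq.heappush(busy_until, start + duration)
    return len(busy_until)


print(environments_needed([(0, 5), (0, 5)]))          # two at once -> 2
print(environments_needed([(0, 5), (0, 5), (0, 5)]))  # three at once -> 3
print(environments_needed([(0, 5), (6, 5)]))          # one after another -> 1
```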

Each Lambda function operates within its own isolated environment, with its own dedicated resources. While it utilizes containerization under the hood, you don't need to worry about the technical specifics: just think of each Lambda as its own independent machine with very limited configuration options. Hopefully the diagram conveys the basics.

As you can imagine, hit it too hard and it can lead to fairly disastrous results. Watching your AWS bill is a real consideration when doing performance testing, and a metric you should definitely consider measuring, or at least keeping an eye on!

Lambda Configuration

Lambdas have several configuration options that can impact performance. Below are the things you can edit in the AWS Console; I'll focus on these because they have a significant impact on performance.

This isn't a definitive list but should cover most cases of why Lambdas have poor performance:

- Memory: Determines both the memory allocation and CPU power available to the function. More memory typically results in faster execution.

- Timeout: Sets the maximum duration a Lambda function can run. Adjust appropriately for the work being processed to avoid unnecessary time limits.

- Concurrency Limit: Controls how many instances of a function can run simultaneously. This is different from provisioned concurrency; it is just a hard limit on the amount you can use.

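- Provisioned Concurrency: Out of your 1000 concurrent executions, you can provision a set number of runtimes for a function so they are initialised ahead of time. Warning: the hard cap comes from the concurrency limit above, not from this setting. Requests beyond the provisioned amount fall back to ordinary on-demand runtimes, but if you have also set a concurrency limit the function won't overflow into the rest of the 1000, so be careful or prepare to be throttled.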

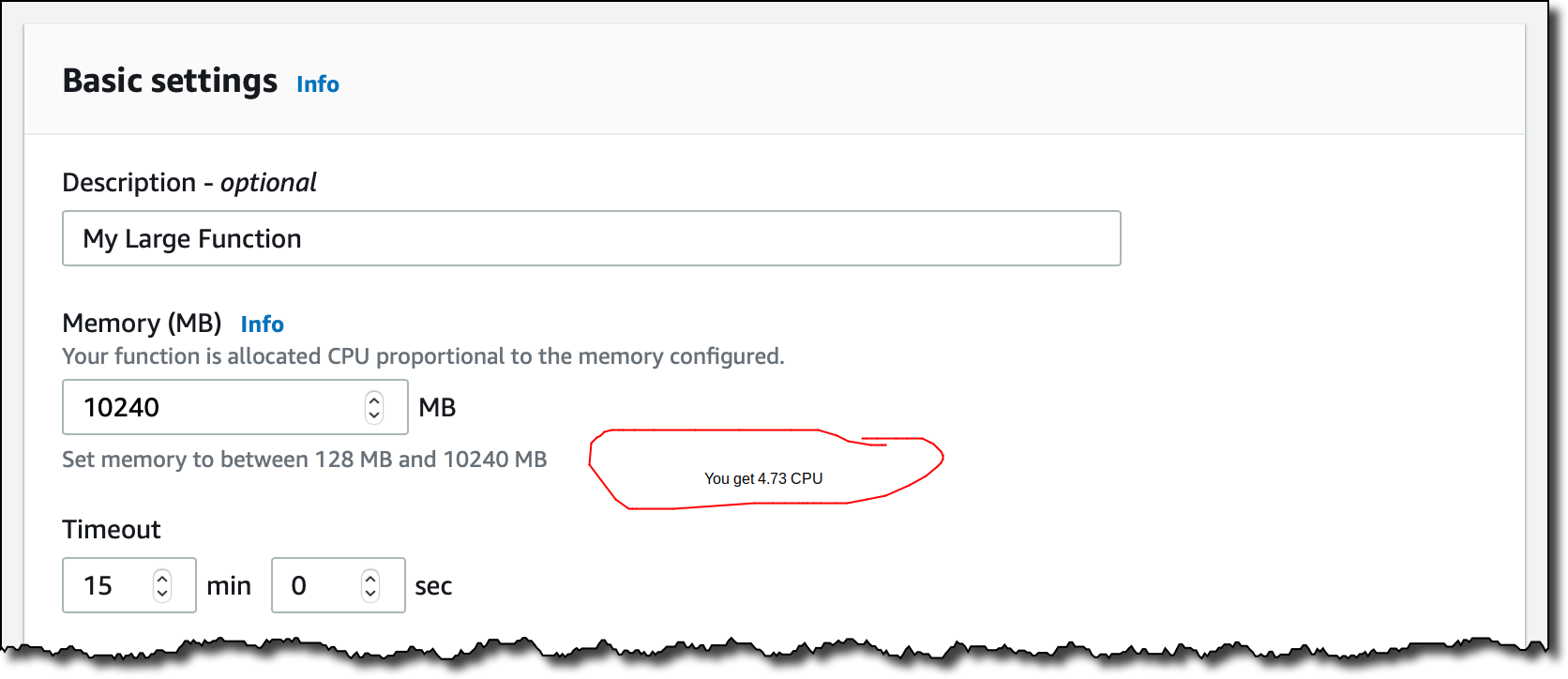
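The same settings can be applied from code rather than the console. The sketch below assumes a client that behaves like `boto3.client("lambda")`; the helper name and the function name in the usage comment are hypothetical, and the range checks simply mirror Lambda's documented bounds (128 MB to 10240 MB of memory, 1 to 900 seconds of timeout).

```python
def apply_lambda_settings(client, function_name, memory_mb, timeout_s,
                          reserved_concurrency=None):
    """Apply memory, timeout and (optionally) a concurrency limit to one function.

    `client` is expected to behave like boto3.client("lambda").
    """
    if not 128 <= memory_mb <= 10240:
        raise ValueError("Lambda memory must be between 128 MB and 10240 MB")
    if not 1 <= timeout_s <= 900:
        raise ValueError("Lambda timeout must be between 1 and 900 seconds")

    # Memory also determines the CPU share, so this is the main performance dial.
    client.update_function_configuration(
        FunctionName=function_name,
        MemorySize=memory_mb,
        Timeout=timeout_s,
    )
    if reserved_concurrency is not None:
        # The hard per-function limit ("Concurrency Limit" in the list above).
        client.put_function_concurrency(
            FunctionName=function_name,
            ReservedConcurrentExecutions=reserved_concurrency,
        )


# Usage (needs boto3 and AWS credentials; "daily-record-check" is a made-up name):
# import boto3
# apply_lambda_settings(boto3.client("lambda"), "daily-record-check", 1024, 300,
#                       reserved_concurrency=50)
```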

Memory

Oh boy, my pet peeve with AWS Lambdas is this: more memory is just more RAM, right? No, because this is also where the CPU setting lives, and that's probably not the only thing it controls. This is the equivalent of an EC2 instance specification.

Honestly, if I could give AWS one piece of feedback, it's this: tell me what CPU I'm getting and give me a detailed spec of what my runtime looks like! Maybe it's vague because it's vCPUs. No idea!

A handy cheatsheet can be found here, with a table mapping memory to CPU.

Increasing the Memory

So you've decided you need a beefier Lambda. The default is 128 MB. PUNY! Your hello world app clearly needs 4 GB of memory.

One thing to consider with Lambdas is time. You are billed for the duration of usage as well as the power, so in some sense adding more power can actually reduce costs: more power = less time taken = less time needed for that machine = less cost.

Keep in mind that this has diminishing returns. Your hello world app won't benefit from 4 GB of RAM, so you'll lose money in that scenario, but commonly it's cheaper (and faster!) to use a beefier Lambda.

Tools exist that can help you calculate the most cost-efficient power settings, albeit common sense is usually the quickest and most efficient way to choose memory. As a general rule, if it seems really slow, definitely play around with the setting!
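The "more power = less cost" arithmetic can be sketched in a few lines. The price per GB-second and the timings below are illustrative assumptions, not measurements, and the per-request charge is left out, so check current AWS pricing before trusting the numbers.

```python
# Illustrative price per GB-second; an assumption, check current AWS pricing.
PRICE_PER_GB_SECOND = 0.0000166667


def invocation_cost(memory_mb, duration_ms, price_per_gb_second=PRICE_PER_GB_SECOND):
    """Cost of one invocation: memory (GB) x duration (s) x price per GB-second."""
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000)
    return gb_seconds * price_per_gb_second


# Hypothetical timings for the same job at two memory settings.
small = invocation_cost(memory_mb=128, duration_ms=4000)  # 0.5 GB-seconds
large = invocation_cost(memory_mb=512, duration_ms=800)   # 0.4 GB-seconds
print(f"128 MB: ${small:.10f}   512 MB: ${large:.10f}")
print("bigger is cheaper" if large < small else "bigger is more expensive")

# Diminishing returns: a hello world that takes 10 ms either way only gets pricier.
print(invocation_cost(4096, 10) > invocation_cost(128, 10))
```

In this made-up case four times the memory finishes five times faster, so the bigger setting wins on both speed and cost. If the speed-up were smaller than the memory increase, it would not.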

Timeout

You can configure a Lambda timeout, which is a good way to make sure your AWS bills don't blow up, but it has a maximum of 15 minutes.

It's understandable why this exists, but it presents one of AWS Lambda's biggest weaknesses.

Let's say you have a daily task to go through a list of records in your database and do a 3rd-party check on them. Cool, that takes 5 minutes at 1769 MB of RAM. Then you scale up: now there are 10x the users and suddenly that job takes over 15 minutes. Oh dear. The job will stop and die before it finishes, and you end up vertically scaling! Yuck.

Now you could make the argument that this should have been planned in from the start, but software planning is difficult, time-consuming and ultimately runs the risk of going nowhere slowly. Sometimes it's obvious: if you know it's going to take an hour to run, don't start with a Lambda. But mistakes happen.

There are a few ways to counteract this if you end up in the situation, but it's not a fun problem to have. Here are a few things, both preventative and what you can do if you find yourself in the situation:

- CloudWatch Alarms: set up an alarm to raise when any job goes over 5, 10 or 12 minutes. This might give you time for an architectural re-think.

- Switch to a dedicated server: ECS or EC2 will work. There's an obvious implication on cost with this one, so use it if you're not upset about losing your serverless badge.

- Chunk up your job: Lambdas can run in parallel, so divvying up the task and using something like a message queue can work.
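As a rough illustration of the chunking option, the sketch below splits a list of record IDs into batches and hands each batch to a queue as one message, so each Lambda invocation only has to process a slice that fits well inside the timeout. The function names and message shape are made up for this example, and `send` is a stand-in: with SQS it could wrap boto3's `send_message(QueueUrl=..., MessageBody=body)`.

```python
import json


def chunked(records, size):
    """Yield consecutive slices of `records`, each at most `size` long."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for i in range(0, len(records), size):
        yield records[i:i + size]


def enqueue_chunks(records, size, send):
    """Send one JSON message per chunk and return how many were sent.

    `send` is any callable that accepts a string body.
    """
    count = 0
    for chunk in chunked(records, size):
        send(json.dumps({"record_ids": chunk}))
        count += 1
    return count


# A plain list stands in for a real queue so the sketch runs anywhere.
outbox = []
sent = enqueue_chunks(list(range(1, 11)), size=4, send=outbox.append)
print(sent)    # 3
print(outbox)  # batches of 4, 4 and 2 record IDs
```

Picking the chunk size is the judgment call: small enough that one batch finishes comfortably under the timeout, large enough that you are not paying for thousands of tiny invocations.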

Lambda timeouts are an interesting topic. When designing a task or action as a Lambda, ask the question 'Will this ever take over 15 minutes?' This foresight could save you!

Concurrency

Concurrency is the number of Lambdas you can have running at once. An important note with this one is that if you go over, Lambda will reject and throttle your requests. This can come up when load or stress testing.

By default you can have 1000 Lambdas executing at once. That sounds like a lot, but on large-scale systems it's feasible that you can breach that limit. You can dedicate a maximum for a single Lambda, up to your limit; this can be a good safety net in case of a DDoS to save you some money.

Of course, if you're ever stress testing or chaos testing, observing system behaviour here may be important.
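A rough way to tell whether a workload is likely to breach the limit is to multiply requests per second by the average duration of a request. The sketch below does just that; the function names are illustrative, and the traffic figures are invented to show both sides of the line.

```python
import math

ACCOUNT_LIMIT = 1000  # the default limit quoted above


def required_concurrency(requests_per_second, avg_duration_s):
    """Estimate concurrent executions as request rate x average duration."""
    return math.ceil(requests_per_second * avg_duration_s)


def will_throttle(requests_per_second, avg_duration_s, limit=ACCOUNT_LIMIT):
    """True when the estimated concurrency is above the limit."""
    return required_concurrency(requests_per_second, avg_duration_s) > limit


print(required_concurrency(100, 0.5))  # 50
print(will_throttle(2000, 0.5))        # False: exactly 1000, right at the limit
print(will_throttle(2500, 0.5))        # True: 1250 is over the limit
```

Note that making the function faster lowers the concurrency you need, which is another reason the memory setting matters.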

Performance Testing Lambdas

The first decision you need to make is how you will invoke the Lambdas: either directly or via a configured API Gateway. The lambda tuner mentioned earlier is quite a good tool for performance testing Lambdas directly.

If you're like me and are dealing with a complex CRUD application behind API Gateway, any common API performance testing tool will suffice. (Please don't use JMeter.)
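For a quick smoke test before reaching for a proper tool, a small load driver can be put together with the standard library. This is only a sketch: `run_load` and `percentile` are hypothetical helpers, and `call` is whatever hits your Lambda (an HTTP request to the API Gateway URL, or a direct invoke); here a short sleep stands in for it.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_load(call, total_requests, workers):
    """Fire `total_requests` calls using `workers` threads.

    Returns (latencies_in_seconds_of_successful_calls, error_count).
    """
    def timed(_):
        start = time.perf_counter()
        try:
            call()
            ok = True
        except Exception:
            ok = False
        return time.perf_counter() - start, ok

    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(timed, range(total_requests)))

    latencies = [elapsed for elapsed, ok in results if ok]
    errors = sum(1 for _, ok in results if not ok)
    return latencies, errors


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list; `pct` is an integer 1..100."""
    ordered = sorted(values)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # integer ceiling division
    return ordered[rank - 1]


# Stand-in for a real request so the sketch runs anywhere.
latencies, errors = run_load(lambda: time.sleep(0.01), total_requests=20, workers=5)
print(f"errors={errors} p50={percentile(latencies, 50):.3f}s p95={percentile(latencies, 95):.3f}s")
```

The `workers` value is effectively the concurrency you are pushing at the function, so it is also a cheap way to watch throttling kick in.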

Measuring Lambdas

Unfortunately you always need to test software performance to make sure it's actually performant. Time to dig into that then!

What to Measure

- Invocation Errors: Count the number of errors occurring during Lambda invocations.

- Concurrency: Monitor how many requests the Lambda function can handle simultaneously.

- Memory Usage: Measure the memory used by the Lambda function during execution.

- Execution Duration: Measure the time the function takes to execute the business logic.

- Cold Start Duration: Measure the time taken for AWS Lambda to initialize during a cold start.

- Request Latency: Track the end-to-end latency from when the request is made to when the response is received.
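Several of these numbers (execution duration, memory usage and cold start duration) show up in the `REPORT` line Lambda writes to the logs after each invocation. The sketch below pulls them out with a regular expression. The sample line is illustrative rather than copied from a real run, and the parser and key names are my own, so treat the exact layout as an assumption to check against your own logs.

```python
import re

# Longer labels come first so "Duration" does not match inside "Billed Duration".
_FIELD = re.compile(
    r"(Billed Duration|Init Duration|Max Memory Used|Memory Size|Duration):"
    r"\s*([\d.]+)\s*(?:ms|MB)"
)

_NAMES = {
    "Duration": "duration_ms",
    "Billed Duration": "billed_duration_ms",
    "Memory Size": "memory_size_mb",
    "Max Memory Used": "max_memory_used_mb",
    "Init Duration": "init_duration_ms",  # only present on cold starts
}


def parse_report(line):
    """Return the metrics found in a REPORT log line, or None for other lines."""
    if not line.startswith("REPORT"):
        return None
    return {_NAMES[label]: float(value) for label, value in _FIELD.findall(line)}


# Illustrative sample line, not taken from a real invocation.
sample = (
    "REPORT RequestId: 8f5f1d2e-0000-0000-0000-000000000000\t"
    "Duration: 102.25 ms\tBilled Duration: 103 ms\t"
    "Memory Size: 512 MB\tMax Memory Used: 87 MB\tInit Duration: 412.60 ms"
)
metrics = parse_report(sample)
print(metrics)
print("cold start" if "init_duration_ms" in metrics else "warm invocation")
```

Comparing `max_memory_used_mb` against `memory_size_mb` across many invocations is a quick way to spot functions that are over- or under-provisioned.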

Cold Starts

Let's dive into an aspect I haven't discussed yet: Cold Starts. They're a more significant issue for Lambdas than traditional servers because each Lambda runtime starts from fresh. Cold starts mean functions that depend heavily on state can face performance bottlenecks, since that state has to be rebuilt every time a new runtime spins up. So if your app needs a lot loaded into memory before it can do useful work, consider alternative options.

Reporting and Evidence

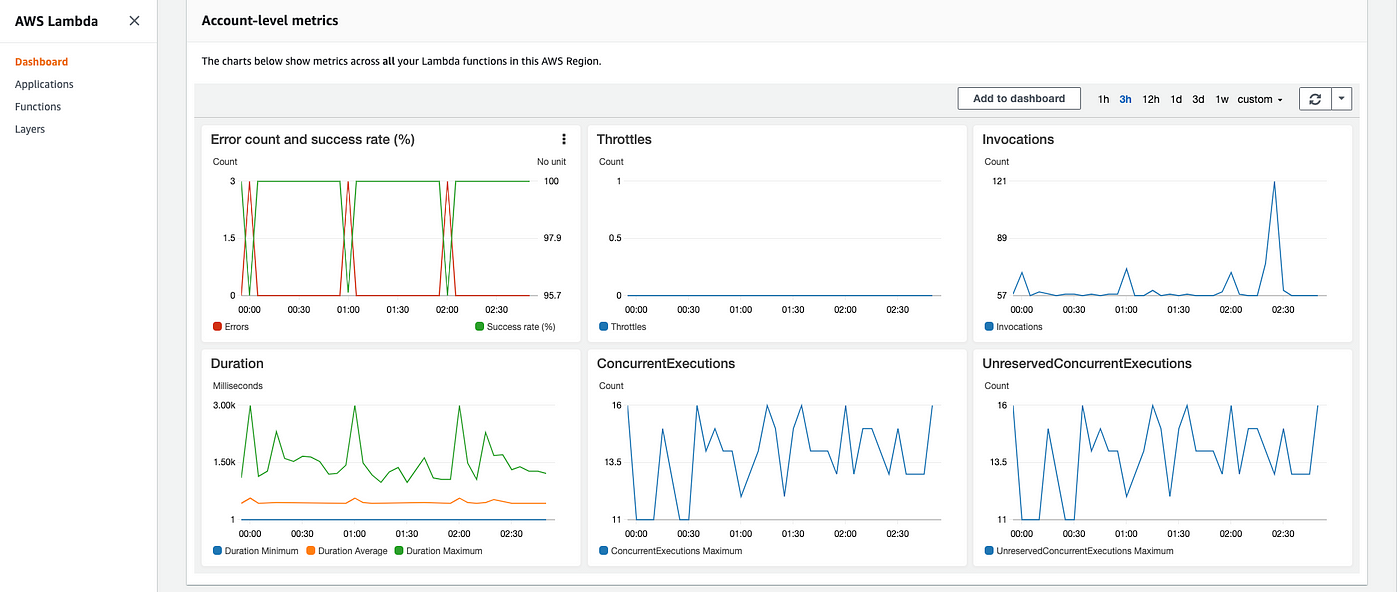

Providing evidence of Lambda performance is actually one of the easy parts of using Lambda.

AWS Lambda Dashboard

The default Lambda dashboards are great!

No setup required. Want proof of good performance? Just send a link to this. It makes assessing performance easy (and is one of the reasons to use serverless!).

Steampipe

I've been using Steampipe recently, another great tool to look at. You can query Lambdas individually for their statistics, and with larger AWS estates you can more easily spot misconfigured Lambdas. And given that the queries are SQL, you can do some pretty nifty aggregations too! With Steampipe you can write queries like the one below to pull out all the Lambda configurations in one view:

    SELECT name,
           memory_size,
           timeout
    FROM aws_lambda_function;

In Conclusion

AWS Lambdas are not a miracle solution to the server maintenance problem; there's a whole ton of thought and configuration required. So when using serverless, beware!